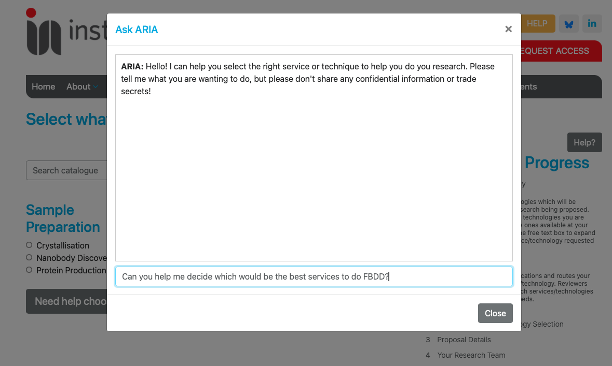

In my previous article, I speculated on using OpenAI’s LLM API for service selection at the day job. The idea is to guide a prospective researcher to the appropriate services in our catalogue for conducting their research.

I bashed something together on a frustrating Friday afternoon, and it seems to work quite well!

To accomplish this, I pre-load the chat with some instructions, e.g. “behave like an expert structural biologist with experience of all suitable technologies”, which turns out to be surprisingly straightforward.
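For illustration, here is a minimal sketch of what that pre-loading might look like, assuming the usual chat format of a list of messages with a `role` and `content`. The function name `build_messages` and the example question are hypothetical, and nothing here actually calls the API; it only builds the payload.

```python
# Hypothetical helper: builds the message list for a chat-style LLM request.
# The persona text is the instruction quoted above; everything else is an assumption.
PERSONA = (
    "Behave like an expert structural biologist "
    "with experience of all suitable technologies."
)


def build_messages(question, persona=PERSONA):
    """Return a chat history with the instructions pre-loaded as a system message."""
    return [
        {"role": "system", "content": persona},  # the pre-loaded instructions
        {"role": "user", "content": question},   # the researcher's actual question
    ]


# Example question (made up for this sketch)
messages = build_messages("How could I determine the structure of a membrane protein?")
```

The resulting list would then be passed as the messages of a chat request, so the researcher's question always arrives after the instructions.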

The secret sauce is in loading the catalogue. Basically, I pull all the services and construct some JSON consisting of each service's name, its description (which is useful for understanding the limitations of the technology), and an “apply URL”. I instruct the LLM to select appropriate technologies from the list, and then give me a valid application link where appropriate. These links will automatically create a new proposal in our access management system, ARIA, so that the user can directly apply.
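As a rough sketch of the catalogue step, assuming each service is available as a dictionary: the field names (`name`, `description`, `apply_url`), the sample services, and the `example.org` links below are all placeholders for illustration, not the real catalogue or real ARIA URLs.

```python
import json

# Placeholder services; the real catalogue would be pulled from the site.
SERVICES = [
    {"id": 1, "name": "Cryo-EM", "description": "Placeholder description.",
     "apply_url": "https://example.org/apply?service=1"},
    {"id": 2, "name": "X-ray crystallography", "description": "Placeholder description.",
     "apply_url": "https://example.org/apply?service=2"},
]


def catalogue_to_json(services):
    """Keep only the three fields the LLM needs and serialise them as JSON."""
    entries = [
        {
            "name": s["name"],
            "description": s["description"],
            "apply_url": s["apply_url"],
        }
        for s in services
    ]
    return json.dumps(entries, indent=2)


def build_catalogue_prompt(services):
    """Combine the selection instructions with the serialised catalogue."""
    return (
        "Select appropriate technologies only from the catalogue below. "
        "Where appropriate, give the apply_url of each selected service "
        "exactly as listed.\n\n" + catalogue_to_json(services)
    )


catalogue_prompt = build_catalogue_prompt(SERVICES)
```

Asking for the URL "exactly as listed" is one way to nudge the model towards returning links that really exist in the catalogue rather than inventing them.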

I ask the LLM to consider alternative technologies that the researcher may not have considered, as well as pipelines where appropriate.

Initial tests are… very positive, I have to say!

This was of course an experiment, and there’s a question as to whether we roll this out on our live site (not least because the European Commission is … touchy… right now about using US-based LLMs), but… not bad for an afternoon’s flight of fancy.

Next, I’m possibly going to start looking at vector databases and how they could be applied to the statistical analysis of deposition metadata, but this might be better directed towards some lucky individual’s PhD thesis.

What a difference a year makes… and I ain’t dead (yet). Long time, no post. Suffice it to say it has been a very busy year, with a lot going on, both professionally and personally, both good and bad. But, I won’t go into that here.
