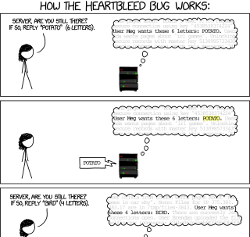

So, unless you have been living under a rock for the last few days, you will have heard about Heartbleed, the recently discovered OpenSSL security bug (go over to XKCD for the best explanation I’ve seen of how it works).

It was bad, really bad. As Bruce Schneier put it:

“Catastrophic” is the right word. On the scale of 1 to 10, this is an 11.

…And it was very bad, but it could have been much, much worse.

As it was, within a day or two of the bug being disclosed, the vast majority of affected servers were patched and service users notified. We don’t know how often, if at all, this bug has been exploited, and we likely never will, but we can easily tell whether it’s been fixed.

Bugs happen, but the only reason we know about Heartbleed at all is that OpenSSL is open source software. Open source allows you, and other third parties, to independently review and audit the code, something that isn’t possible with software from proprietary vendors.

Does software from Apple, Google or Microsoft contain similar ticking time bombs? Who knows? We have no way of finding out. This is one of the many reasons why you should never trust closed source or proprietary security products. Ever.

Bluntly, anything where you can’t see the code can’t be audited, so can’t be trusted not to do something malicious, whether by accident or design. Trusting a product from a manufacturer purely on brand is a genetic fallacy.

What next

OpenSSL is an incredible project, and the community does a bang-up job of keeping our communications safe from prying eyes. The fact that this bug, a simple missing bounds check that let a buffer be read past its end (a mistake every C programmer has made countless times in their career), was not spotted for around two years is a little troubling, but those of us outside the project can’t really comment usefully on that.
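To make that class of mistake concrete, here is a minimal sketch in C. It is not OpenSSL’s code: the `memory` array, `PAYLOAD_LEN` and both function names are hypothetical, and the “process memory” is simulated with a single array so that the sketch itself never reads out of bounds. The idea is simply that a request claims a payload length, and the unchecked version echoes back that many bytes without comparing the claim to what was actually sent.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for process memory: a 4-byte request payload
   ("bird") that happens to sit right next to unrelated secret data. */
static const char memory[] = "bird" "SECRET-KEY";
#define PAYLOAD_LEN 4u /* number of bytes the client actually sent */

/* Unchecked: trusts the length the client claims, so a claim larger
   than PAYLOAD_LEN copies out the neighbouring secret as well. */
size_t echo_unchecked(size_t claimed_len, char *out, size_t out_cap)
{
    /* These two clamps only keep the sketch inside its own arrays;
       they are not a check against the real payload length. */
    if (claimed_len > out_cap)
        claimed_len = out_cap;
    if (claimed_len > sizeof memory - 1)
        claimed_len = sizeof memory - 1;

    memcpy(out, memory, claimed_len);
    return claimed_len;
}

/* Checked: a claim longer than the real payload is discarded
   and nothing is copied. */
size_t echo_checked(size_t claimed_len, char *out, size_t out_cap)
{
    if (claimed_len > PAYLOAD_LEN || claimed_len > out_cap)
        return 0;

    memcpy(out, memory, claimed_len);
    return claimed_len;
}
```

Asking `echo_unchecked` for 14 bytes hands back the four payload bytes plus the ten bytes of secret sitting next to them, while `echo_checked` returns nothing for the same request. The entire difference is one comparison.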

Bugs happen, and sometimes they’re serious, but the responsible disclosure process was followed and internet security is stronger for it. Patch and move on.

Talking specifically about OpenSSL, and open source in general, my thinking is that Heartbleed highlights a resource problem common to many projects that fall under the umbrella of “infrastructure”. Such projects are largely built by volunteers (although with large contributions from engineers paid by their employers to work on them), so sometimes there isn’t enough bandwidth to do everything. And because they are “infrastructure”, they don’t get as much attention as the latest whizz-bang project on Hacker News.

My hope is that this bug will see more stakeholders taking an active interest, and so a growth in the number of contributors and auditors. If every company that relied on OpenSSL paid one of its engineers to spend one day a month trying to break it, or auditing the latest code, how many more person-hours would that give the project?

Another issue Heartbleed illustrates painfully well is the danger of homogeneity. When the vast majority of the world uses the same bit of software, it presents a single point of failure, and one error can have a massive impact.

OpenSSL has become the de facto standard SSL/TLS implementation through merit, and while there is a very good case for not rolling your own crypto, I wonder if there is also a case for encouraging and maturing different open source implementations of the protocol? There are pros and cons on either side, but single points of failure should always be avoided…

Image “How the Heartbleed bug works” by XKCD