Part of my day job is writing and maintaining a fairly massive and complex piece of software which has become mission-critical for various large scientific infrastructures around Europe.

For reasons that will be familiar to anyone who’s built platforms that suddenly become successful, certain things were left out when building the software. One of these was any kind of monitoring.

We are of course building this out “properly”, but one of the simple things I did early on turned out to be a really big win: catching all fatal errors and exceptions being thrown, and piping them to a Slack channel set up for the purpose. With the help of clerk, this can be made easier.

Before adding the Slack monitoring we’d often be surprised by errors, receiving garbled reports third hand as they were escalated from a user email, through the administration team, and on to us. By that time the detail had been lost, and any logs had long since been rotated away.

Our devs live in Slack, and for a multinational team whose members frequently travel, it has become the nervous system of the organisation. Now my team is no longer surprised, and can jump on issues instantly.

It was very simple to set up, but turned out to be a big win. Here’s how.

First, capture fatal errors in your application

I’ve written before about capturing WSOD (white screen of death) errors. Assuming you’re using PHP, this comes down to registering an exception handler and an error handler for your application.
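As a rough illustration of what that registration can look like, here is a minimal sketch. The `report_fatal()` and `is_fatal_error()` functions are hypothetical names made up for this example, and `report_fatal()` only writes to the error log. It assumes PHP 7 or later, where uncaught `Error` objects also reach the exception handler.

```php
<?php
// Hypothetical sink for this sketch: swap in whatever should receive the report.
function report_fatal(string $message, string $file, int $line): void
{
    error_log(sprintf('[fatal] %s in %s:%d', $message, $file, $line));
}

// True when an error_get_last() entry is one of the fatal types that
// never reach a handler registered with set_error_handler().
function is_fatal_error(?array $error): bool
{
    $fatalTypes = E_ERROR | E_PARSE | E_CORE_ERROR | E_COMPILE_ERROR;
    return $error !== null && ($error['type'] & $fatalTypes) !== 0;
}

// Uncaught exceptions, including PHP 7+ Error objects such as TypeError.
set_exception_handler(function (Throwable $e): void {
    report_fatal($e->getMessage(), $e->getFile(), $e->getLine());
});

// Errors that do reach a handler (warnings, E_USER_ERROR and so on).
set_error_handler(function (int $severity, string $message, string $file, int $line): bool {
    if ((error_reporting() & $severity) !== 0) {
        report_fatal($message, $file, $line);
    }
    return false; // let PHP's normal error handling carry on as well
});

// Fatal errors: the script is already dying, so check at shutdown.
register_shutdown_function(function (): void {
    $error = error_get_last();
    if (is_fatal_error($error)) {
        report_fatal($error['message'], $error['file'], $error['line']);
    }
});
```

The shutdown function is there because true fatals (out of memory, parse errors and the like) bypass `set_error_handler()`, so the only place left to see them is `error_get_last()` as the script exits. Reporting every warning may be too noisy for some applications; narrowing the severity check is an easy adjustment.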

Create a slack app

Next, create a Slack application and add it to a monitoring channel in your workspace.

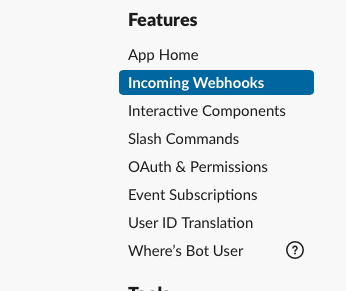

Add an “Incoming Webhook” for your app that posts to your monitoring channel. This will give you a URL, and anything you POST to it will end up in your channel.

Posted text supports Slack’s Markdown-style formatting, which is handy if you want to post raw error messages etc., or links to data dumps.
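To make the webhook step concrete, here is a small sketch of building and sending a message. The function names and the `SLACK_WEBHOOK_URL` environment variable are assumptions for this example, not anything Slack mandates. It relies on PHP’s HTTP stream wrapper, so `allow_url_fopen` must be enabled, and it assumes the webhook answers a successful post with the plain body `ok`.

```php
<?php
// Build the JSON body for an incoming webhook: a bold title followed by
// the raw detail in a code block.
function build_slack_payload(string $title, string $detail): string
{
    // Slack asks for &, < and > to be sent as HTML entities.
    $escape = function (string $s): string {
        return str_replace(['&', '<', '>'], ['&amp;', '&lt;', '&gt;'], $s);
    };
    $fence = str_repeat('`', 3); // code-block marker, built at runtime
    $text = '*' . $escape($title) . "*\n" . $fence . "\n" . $escape($detail) . "\n" . $fence;

    return (string) json_encode(
        ['text' => $text],
        JSON_UNESCAPED_SLASHES | JSON_UNESCAPED_UNICODE | JSON_INVALID_UTF8_SUBSTITUTE
    );
}

// POST the payload to the webhook URL; returns true if Slack replied "ok".
function post_to_slack(string $webhookUrl, string $payload): bool
{
    $context = stream_context_create([
        'http' => [
            'method'        => 'POST',
            'header'        => "Content-Type: application/json\r\n",
            'content'       => $payload,
            'timeout'       => 5,    // never hang the request on Slack
            'ignore_errors' => true, // still read the body on 4xx/5xx
        ],
    ]);
    $response = @file_get_contents($webhookUrl, false, $context);

    return $response === 'ok';
}

// Usage: the environment variable name is a placeholder for this sketch.
$webhookUrl = getenv('SLACK_WEBHOOK_URL');
if (is_string($webhookUrl) && $webhookUrl !== '') {
    post_to_slack($webhookUrl, build_slack_payload('Test alert', 'Hello from PHP'));
}
```

Keeping the URL out of the source matters, since anyone who has it can post to the channel.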

Link the two

Finally, from your error handler, POST the error, stack trace, and any other useful detail to your Slack channel.
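One way to wire this up is sketched below. `format_error_report()` and `make_reporting_handler()` are names invented for this example, and the sender here is a stand-in that only logs; in a real setup it would be whatever function POSTs to your webhook URL.

```php
<?php
// Turn any Throwable into a plain-text report: class, message, location, trace.
function format_error_report(Throwable $e): string
{
    return sprintf(
        "%s: %s\nat %s:%d\n\n%s",
        get_class($e),
        $e->getMessage(),
        $e->getFile(),
        $e->getLine(),
        $e->getTraceAsString()
    );
}

// Build an exception handler around a sender. $send is any callable that
// takes the report text and delivers it somewhere.
function make_reporting_handler(callable $send): callable
{
    return function (Throwable $e) use ($send): void {
        try {
            $send(format_error_report($e));
        } catch (Throwable $sendFailure) {
            // Never let a failed notification mask the original error.
            error_log('Error notification failed: ' . $sendFailure->getMessage());
        }
        error_log((string) $e); // keep the normal log entry as well
    };
}

// Placeholder sender for this sketch; replace it with your webhook POST.
$send = function (string $report): void {
    error_log("[would post to Slack]\n" . $report);
};

set_exception_handler(make_reporting_handler($send));
```

Passing the sender in as a callable keeps the handler easy to test without touching the network, and the `try`/`catch` means a Slack outage cannot turn one error into two.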

Conclusion

Obviously, this is no replacement for proper monitoring, which can provide historical information and statistics about the overall health of your platform.

However, in the absence of that, this may be a quick win that you can implement without too much effort. Certainly for us it proved invaluable, and allowed us to quickly diagnose and fix faults we would otherwise have been unaware of.