Ok, so here’s an experimental, proof of concept plugin for Idno that provides OpenPGP encryption for form posts between web clients and the server (just in time for Reset the net 😉 ).

Ok, so here’s an experimental, proof of concept plugin for Idno that provides OpenPGP encryption for form posts between web clients and the server (just in time for Reset the net 😉 ).

It makes use of OpenPGP.js, a pure javascript implementation of the OpenPGP spec. On form submission, the plugin will encrypt all form variables, client side in the browser, before transmitting them to the server.

What this for

Primarily, this is aimed at (partially) addressing a situation where you have an Idno site sitting behind a load balancer/reverse proxy like Squid, Nginx or Pound.

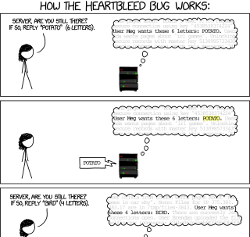

In this configuration it is common for the connection to be HTTPS only between the client and the load balancer node, at which point HTTPS is stripped and the connection to the back-end web server is conducted over HTTP. As we know from the NSA smiley, attacking this point where HTTPS is stripped at the load balancer was one of the ways the NSA and GCHQ was able to burgle customer data from Google’s cloud.

Using this plugin, the contents of the form will be encrypted with the back end server’s public key, meaning that the payload will remain encrypted as it transits through your data centre until it’s final destination, where, if you redesign your system as such, it could be stored in encrypted form and decoded only when necessary.

What this is NOT for

This is not intended to be a replacement for HTTPS.

Encrypting the form on the client does raise the bar slightly, making it much harder for a passive attacker to simply read your username and password as it travels over the wire. However, it does not protect against a more sophisticated attacker capable of launching a “Man in the middle“, or “Man on the side attack“.

Using HTTPS is important, because without it an attacker could insert their own public key into the mix, or modify the javascript sent.

Usage and limitations

The plugin currently piggybacks off of gnuPG to do the decryption on the server end, and so this requires you to perform a couple of configuration steps.

- Make sure you’ve got gnuPG installed. If the binary isn’t at at

/usr/bin/gpg, you can setopengpg_gnupgin yourconfig.ini - Generate a keypair for your web server user

su www-data gpg --gen-key

- Make sure that the

.gnupgdirectory is not accessible publicly, using a .htaccess or similar, since it’ll contain a secret key! - Get a copy of the public key

gpg --export -a "User Name", and save it asopenpgpPublicKeyin yourconfig.ini

Once you’ve done this, activate your plugin in your Idno settings, and you should be ready to rock and roll!

It was enjoyable playing with OpenPGP.js, and I can already think of some other cool uses for it (most obvious might be to enhance my OpenPGP elgg plugin).

Have fun!

So, unless you have been living under a rock for the last few days, you would have heard about

So, unless you have been living under a rock for the last few days, you would have heard about